Symptoms

In a Lync and Exchange UM environment (the version doesn’t particularly matter in this case), voicemail messages were not being delivered. The voicemail folder on the Exchange server (C:\Program Files\Microsoft\Exchange Server\V15\UnifiedMessaging\voicemail) was filling up with hundreds of .txt header files and .wav voicemail audio files.

Resolution

This issue is not necessarily new (Reference1 Reference2), but it didn’t immediately come up in search results. I also wanted to spend more time discussing why this issue happened and why it’s important to understand receive connector scoping.

This issue was caused by an incorrect modification to a receive connector on Exchange. Specifically, a custom connector used for application relay was changed so that, instead of listing only the individual IP addresses that needed to relay (e.g., printers, copiers, scanners, and third-party applications), its Remote IP Ranges scoping included the entire IP subnet. As a result, Lync and Exchange UM no longer used the default receive connectors (which have the required “Exchange Server Authentication” enabled) and instead used the custom application relay connector (which did not have Exchange Server Authentication enabled).

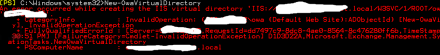

This resulted in the voicemail messages sitting in the voicemail folder and errors (Event ID 1423/1446/1335) being logged in the Application log. The errors state that processing failed for the messages:

The Microsoft Exchange Unified Messaging service on the Mailbox server encountered an error while trying to process the message with header file “C:\Program Files\Microsoft\Exchange Server\V15\UnifiedMessaging\voicemail\<string>.txt”. Error details: “Microsoft.Exchange.UM.UMCore.SmtpSubmissionException: Submission to the Hub Transport server failed. The operation will be retried. —> Microsoft.Exchange.Net.ExSmtpClient.UnexpectedSmtpServerResponseException: Unexpected SMTP server response. Expected: 220, actual: 500, whole response: 500 5.3.3 Unrecognized command

It’s also possible that the voicemail messages will eventually be deleted after failing processing too many times (Event ID 1335):

The Microsoft Exchange Unified Messaging service on the Mailbox server encountered an error while trying to process the message with header file “C:\Program Files\Microsoft\Exchange Server\V15\UnifiedMessaging\voicemail\<string>.txt”. The message will be deleted and the “MSExchangeUMAvailability: % of Messages Successfully Processed Over the Last Hour” performance counter will be decreased. Error details: “Microsoft.Exchange.UM.UMCore.ReachMaxProcessedTimesException: This message has reached the maximum processed count, “6”.

Unfortunately, once you see the message above (Event ID 1335), the voicemail cannot be recovered. When UM states the message will be deleted, it is in fact deleted with no chance of recovery. If the issue had been going on for several days and this folder was part of your daily backup sets, you could technically restore the files and copy them back into the voicemail directory, where they would be processed. Without a backup, however, these voicemails are permanently lost.

Note: Certain failed voicemail messages can be found in the “C:\Program Files\Microsoft\Exchange Server\V15\UnifiedMessaging\badvoicemail” directory. However, as our failure was a permanent failure related to Transport, they did not get moved to the badvoicemail directory and instead were permanently deleted.

Background

I wanted to further explain how this issue happened, and hopefully clear up confusion around receive connector scoping. In our scenario, someone left a voicemail for an Exchange UM-enabled mailbox which was received and processed by Exchange. The header and audio files for this voicemail message were temporarily stored in the “C:\Program Files\Microsoft\Exchange Server\V15\UnifiedMessaging\voicemail” directory on the Exchange UM server. Our scenario involved Exchange 2013, but the same general logic would apply to Exchange 2007/2010/2016. UM would normally submit these voicemail messages to transport using one of the default Receive Connectors which would have “Exchange Server Authentication” enabled. These messages would then be delivered to the destination mailbox.

Our failure was a result of the UM services being directed to a Receive Connector which did not have the necessary authentication enabled on it (the custom relay connector which only had Anonymous authentication enabled). Under normal circumstances, this issue would probably be detected within a few hours (as users began complaining of not receiving voicemails) but in our case the change was made before the holidays and was not detected until this week (another reason to avoid IT changes before a long holiday). This resulted in the permanent Event 1335 failure noted above and the loss of the voicemail. Since this failure occurs before reaching transport, Safety Net will not be any help.

So let’s turn our focus to Receive Connector scoping, and specifically to defining the RemoteIPRange setting (the RemoteIPRanges parameter in the Exchange Management Shell). Remote IP Ranges define which source IP addresses a connector is responsible for handling. Depending on the local listening port, local listening IP address, and RemoteIPRange configuration of each Receive Connector, the Microsoft Exchange Frontend Transport Service and Microsoft Exchange Transport Service route each incoming connection to the correct Receive Connector. The chosen connector then handles the connection based on its configured authentication methods, permission groups, etc. A Receive Connector must have a unique combination of local listening port, local listening IP address, and Remote IP Address (RemoteIPRange) configuration. This means you can have multiple Receive Connectors with the same listening IP address and port (25, for instance) as long as each connector’s RemoteIPRange configuration is unique. You could also have the same RemoteIPRange configuration on multiple Receive Connectors if the port or listening IP address is different, and so on.
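The uniqueness rule above can be illustrated with a small Python sketch. This is not how Exchange validates connectors internally; it is only a model of the rule as described, and the function name `find_conflicts`, the tuple layout, and the sample connector names are assumptions made for illustration.

```python
from collections import defaultdict

def find_conflicts(connectors):
    """Group connectors by (binding, port, remote range) and return any
    combination that is claimed by more than one connector."""
    seen = defaultdict(list)
    for name, binding, port, remote_range in connectors:
        seen[(binding, port, remote_range)].append(name)
    return {key: names for key, names in seen.items() if len(names) > 1}

# Hypothetical inventory: same binding and port, different remote ranges (allowed),
# plus one connector that duplicates an existing combination (a conflict).
sample = [
    ("Default Receive Connector", "192.168.1.10", 25, "0.0.0.0-255.255.255.255"),
    ("ApplicationRelayConnector", "192.168.1.10", 25, "192.168.1.55"),
    ("DuplicateRelayConnector", "192.168.1.10", 25, "192.168.1.55"),
    ("AltPortConnector", "192.168.1.10", 2525, "192.168.1.55"),
]

conflicts = find_conflicts(sample)
for key, names in conflicts.items():
    print(key, "is shared by", names)
```

Only the two connectors that share all three values are reported; the connector on port 2525 reuses the same remote range without conflict because its port differs.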

The default Receive Connectors all have a default RemoteIPRange of 0.0.0.0-255.255.255.255 (all IPv4 addresses) and ::-ffff:ffff:ffff:ffff:ffff:ffff:ffff:ffff (all IPv6 addresses). The rule for processing RemoteIPRange configurations is that the most specific match wins: the connector whose range most narrowly covers the connecting IP address is used. Say I have two Receive Connectors in the below configuration:

Name: Default Receive Connector

Local Listening IP and Port (Bindings): 192.168.1.10:25

RemoteIPRange: 0.0.0.0-255.255.255.255

Name: ApplicationRelayConnector

Local Listening IP and Port (Bindings): 192.168.1.10:25

RemoteIPRange: 192.168.1.55

With this configuration, if an inbound connection on port 25 destined for 192.168.1.10 is created from 192.168.1.55, then ApplicationRelayConnector would be used and its settings would apply. If an inbound connection to 192.168.1.10:25 came from 192.168.1.200, then Default Receive Connector would be used instead.
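To make the selection rule concrete, here is a minimal Python sketch of the two-connector example. It assumes that "most specific" means the matching range covering the fewest addresses, and it handles IPv4 only. The `Connector` class and the `select_connector` function are hypothetical names for illustration and do not reflect Exchange's actual implementation.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Connector:
    name: str
    binding: str        # local listening IP address
    port: int           # local listening port
    remote_range: str   # single IP, CIDR block, or "start-end" range (IPv4)

def range_bounds(spec):
    """Return the (first, last) addresses of a range spec as integers."""
    if "-" in spec:
        start, end = spec.split("-")
        return (int(ipaddress.ip_address(start.strip())),
                int(ipaddress.ip_address(end.strip())))
    net = ipaddress.ip_network(spec, strict=False)
    return int(net.network_address), int(net.broadcast_address)

def select_connector(connectors, local_ip, port, remote_ip):
    """Pick the connector on this binding/port whose remote range contains
    remote_ip and spans the fewest addresses; None if nothing matches."""
    remote = int(ipaddress.ip_address(remote_ip))
    candidates = []
    for c in connectors:
        if c.binding != local_ip or c.port != port:
            continue
        first, last = range_bounds(c.remote_range)
        if first <= remote <= last:
            candidates.append((last - first, c))
    if not candidates:
        return None
    return min(candidates, key=lambda item: item[0])[1]

CONNECTORS = [
    Connector("Default Receive Connector", "192.168.1.10", 25, "0.0.0.0-255.255.255.255"),
    Connector("ApplicationRelayConnector", "192.168.1.10", 25, "192.168.1.55"),
]

print(select_connector(CONNECTORS, "192.168.1.10", 25, "192.168.1.55").name)   # ApplicationRelayConnector
print(select_connector(CONNECTORS, "192.168.1.10", 25, "192.168.1.200").name)  # Default Receive Connector
```

Both connectors match 192.168.1.55, but the single-address range is narrower than the all-addresses range, so the relay connector wins. Only the default connector matches 192.168.1.200.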

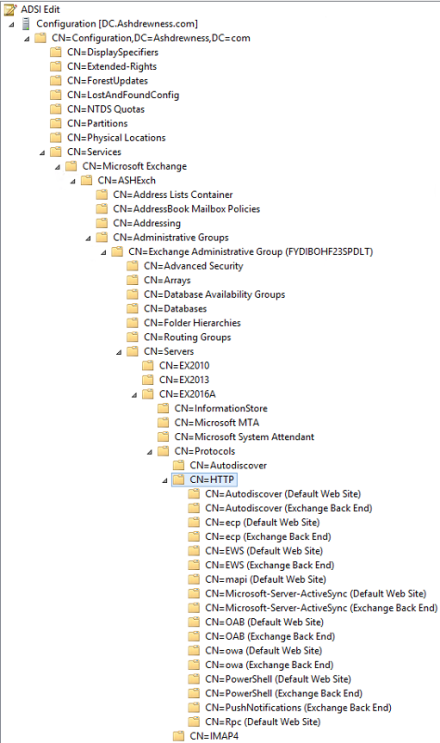

The image above was taken from the “Troubleshooting Transport” chapter of the Exchange Server Troubleshooting Companion, an eBook co-authored by Paul Cunningham and myself. It’s a great visual aid for understanding which Receive Connector will accept a connection from a given remote IP address. The chapter also contains great tips for troubleshooting connectors, mail flow, and Exchange in general.

So in my customer’s specific scenario, instead of defining individual IP addresses on their custom application relay receive connector, they defined the entire internal IP subnet (192.168.1.0/24). As a result, the custom application relay connector accepted connections not only from the internal devices that needed to relay, but also from the Exchange server itself and the Lync server, which broke Exchange Server Authentication. As a best practice, you should always use individual IP addresses when configuring custom application relay connectors, so that you do not inadvertently break other Exchange communications. If this customer had multiple Exchange Servers, this change would have also broken Exchange server-to-server port 25 communications.
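A quick way to reason about a proposed relay scope before applying it is to check whether any server that depends on Exchange Server Authentication falls inside it. The Python sketch below does that with the standard `ipaddress` module. The function name `servers_caught_by_relay` and the Lync server address (192.168.1.20) are assumptions for illustration; only the 192.168.1.10 Exchange address and the two relay scopes come from the examples in this post.

```python
import ipaddress

def servers_caught_by_relay(relay_ranges, server_ips):
    """Return the servers whose IP address falls inside any relay range.
    relay_ranges: list of single IPs or CIDR blocks.
    server_ips: mapping of server name to IP address."""
    networks = [ipaddress.ip_network(r, strict=False) for r in relay_ranges]
    caught = {}
    for name, ip in server_ips.items():
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in networks):
            caught[name] = ip
    return caught

# Servers that must keep using the default receive connectors.
# The Lync address is a made-up example value.
SERVERS = {"Exchange": "192.168.1.10", "Lync": "192.168.1.20"}

too_broad = servers_caught_by_relay(["192.168.1.0/24"], SERVERS)
scoped = servers_caught_by_relay(["192.168.1.55"], SERVERS)

print("Whole subnet catches:", too_broad)
print("Single relay IP catches:", scoped)
```

With the whole subnet as the relay scope, both servers land on the relay connector. With only the individual relay address listed, neither does.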